Built Secure for Behavioral Health

1. Leadership Rooted in Cybersecurity and Online Safety

Founder & CEO Ross Young brings over 15 years of leadership in cybersecurity and ethical AI. Prior to founding Clinically AI, Ross served as EVP of North America at a pioneering AI safety platform for K–12 education, where he helped lead global product strategy and North American operations. The platform used natural language processing and AI to detect early signs of suicide, school violence, sexual abuse, and substance use disorder in real time. To do so, it had to comply with the strictest cybersecurity standards in multiple countries. Today, that system protects over 23 million students across the globe.

Clinically AI’s experience blends deep technical proficiency with an uncompromising commitment to safety and privacy. This foundation of AI built to protect the most vulnerable now drives Clinically AI’s mission in behavioral health. We’ve built a platform engineered from the ground up to be secure, responsible, and aligned with clinical realities.

2. Secure Architecture & Zero Data Retention (ZDR)

Security is not a feature of our platform—it’s a foundation. Clinically AI is designed to meet and exceed regulatory and ethical standards across every layer:

SOC 2 Certified:

Our systems, operations, and internal controls are independently audited for security, availability, and confidentiality. As part of this process, we conducted penetration testing on our systems.

HIPAA-Compliant Infrastructure:

We ensure all protected health information (PHI) is handled in accordance with HIPAA guidelines.

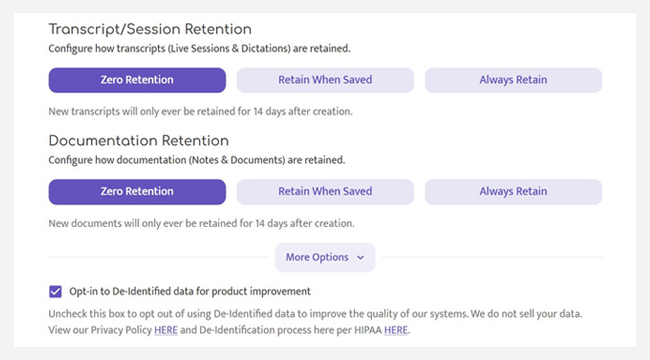

Zero Data Retention (ZDR):

No raw session data, including audio, is stored after processing. Audio is transcribed immediately and then destroyed. The resulting text can optionally be retained in a secure, private cloud environment, based on the organization’s preferences.

Model Isolation:

Our models are not trained on customer data and cannot access PHI after interaction. Once the AI completes its task, it no longer has access to the information.

This means our models cannot be poisoned, compromised, or exposed through traditional data leakage vectors. Clinically AI is built for zero-trust environments, giving administrators total control and full transparency.
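The zero-retention flow described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the platform's actual implementation: `RetentionPolicy`, `process_session`, and `fake_transcribe` are hypothetical names, `fake_transcribe` stands in for a real speech-to-text call, and the in-memory `store` dictionary stands in for an organization's private cloud storage.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class RetentionPolicy:
    """Hypothetical per-organization setting: keep the transcript or not."""
    retain_transcript: bool = False


def process_session(
    session_id: str,
    audio: bytearray,
    transcribe: Callable[[bytearray], str],
    policy: RetentionPolicy,
    store: Dict[str, str],
) -> str:
    """Transcribe audio, wipe the audio buffer, and store text only if allowed."""
    try:
        text = transcribe(audio)
    finally:
        # Overwrite the buffer with zeros, then empty it, whether or not
        # transcription succeeded. The audio is never written to storage.
        audio[:] = bytes(len(audio))
        audio.clear()
    if policy.retain_transcript:
        store[session_id] = text  # retained only when the organization opts in
    return text  # returned for immediate use by the caller


def fake_transcribe(raw: bytearray) -> str:
    # Placeholder for a real speech-to-text call (assumption).
    return f"transcript of {len(raw)} audio bytes"
```

With `RetentionPolicy(retain_transcript=False)` the transcript is handed back to the caller for the current task and nothing is written to `store`; the audio buffer is empty in either case.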

3. De-Identification via Safe Harbor Method

When training or fine-tuning models, we never use customer data. Instead, we rely on a separate, rigorously managed dataset composed of de-identified content. This data is processed using the Safe Harbor method, the gold standard in healthcare data protection and the most widely accepted method for de-identification under HIPAA. This method systematically removes 18 key identifiers (including names, geographic locations, dates directly tied to an individual, and biometric identifiers), ensuring that the remaining data cannot reasonably be linked to a specific person. We’ve published our de-identification process here; it has been reviewed by legal and HIPAA experts.

This approach ensures that patient safety and privacy are never at risk. The Safe Harbor method is the trusted standard across the medical industry and provides a secure path for model improvement without introducing breach risks or compliance concerns.

Additionally, we only use de-identified data after receiving explicit approval from the contributing customer organization, and only for the agreed-upon scope of improvement.
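To make the Safe Harbor idea concrete, here is a minimal sketch that applies a few of its rules to one structured record. It is an illustration only and not the published de-identification process: the field names are hypothetical, only a handful of the 18 identifier categories are covered, free-text fields are passed through unchanged (a real pipeline would also have to scrub them), and the set of low-population ZIP prefixes must be supplied by the caller.

```python
from typing import Any, Dict, Set

# Hypothetical field names for direct identifiers that are dropped outright.
DIRECT_IDENTIFIER_FIELDS = {
    "name", "phone", "email", "ssn", "medical_record_number", "street_address",
}


def deidentify(record: Dict[str, Any], low_population_zip3: Set[str]) -> Dict[str, Any]:
    """Apply a few Safe Harbor style rules to one structured record.

    low_population_zip3 holds three-digit ZIP prefixes whose area contains
    20,000 or fewer people; the caller must supply it (assumption).
    """
    out: Dict[str, Any] = {}
    for key, value in record.items():
        if key in DIRECT_IDENTIFIER_FIELDS:
            continue  # drop direct identifiers entirely
        if key == "zip":
            # Keep only the first three digits, or "000" for small areas.
            prefix = str(value)[:3]
            out["zip3"] = "000" if prefix in low_population_zip3 else prefix
        elif key == "date_of_service":
            # Keep the year only; expects an ISO "YYYY-MM-DD" string.
            out["year_of_service"] = str(value)[:4]
        elif key == "age":
            # Ages of 90 and above are aggregated into one category.
            out["age"] = "90+" if int(value) >= 90 else int(value)
        else:
            out[key] = value  # free text is NOT scrubbed in this sketch
    return out
```

Generalizing values (year instead of full date, ZIP prefix instead of full ZIP) rather than deleting them keeps the record useful for model improvement while removing the detail that ties it to one person.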

4. Clinician-in-the-Loop Verification

Artificial intelligence must be accountable to clinical standards. Every model used in Clinically AI undergoes Clinician-in-the-Loop (CITL) verification. This is not a superficial check—it’s a structured process involving:

Expert review of model outputs by licensed behavioral health professionals

Scenario testing across various clinical contexts

Pre-release testing for hallucination, inconsistency, and bias detection

Comparison to clinical documentation benchmarks set by organizations, payers, and accrediting bodies

We do not release models until they have been fully verified against the clinical tasks they are meant to support. Whether the use case involves progress note generation, fidelity checking, or prescription guidance, we ensure the AI supports—not disrupts—your standards of care.

This process also allows us to introduce cutting-edge models—including those with advanced reasoning and contextual awareness—into the behavioral health ecosystem without compromising safety or alignment.

5. AI That Supports, Never Supersedes Clinicians

Clinicians are the ultimate decision-makers. Clinically AI is designed to support that role, not replace it. The judgement of an educated, trained, and licensed clinician should always supersede the AI’s reasoning, which is why the tool lets the clinician interact directly with the AI. Our system enforces structured documentation and instruction through organization-defined templates, but clinicians retain the ability to:

Adjust tone, phrasing, and language style to reflect their voice

Tell the AI how to use contextual information

Provide clinical judgement and observations to the AI

Ask the AI for alternative phrasing or content adjustments that reflect the nuance of care

Importantly, clinicians cannot modify organizational prompts or compliance logic. This protects the integrity of organizational standards and ensures consistent documentation quality across providers.
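The split between locked organizational settings and clinician-adjustable input could be modeled along these lines. This is a sketch under assumptions, not the product's data model: `OrgTemplate`, `ClinicianPreferences`, and `build_request` are hypothetical names, and the request dictionary is an invented shape.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class OrgTemplate:
    """Organization-owned settings; frozen so they cannot be reassigned."""
    system_prompt: str
    required_sections: Tuple[str, ...]


@dataclass
class ClinicianPreferences:
    """Clinician-owned settings that may change from note to note."""
    tone: str = "neutral"
    observations: str = ""


def build_request(template: OrgTemplate, prefs: ClinicianPreferences) -> dict:
    """Combine locked organizational instructions with clinician input."""
    return {
        "system": template.system_prompt,            # organization controlled
        "sections": list(template.required_sections),
        "style": {"tone": prefs.tone},               # clinician controlled
        "clinician_observations": prefs.observations,
    }
```

A clinician-facing code path can freely update `ClinicianPreferences`, while an attempt to assign to `template.system_prompt` raises `dataclasses.FrozenInstanceError`, which mirrors the rule that organizational prompts stay fixed.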

We believe this collaborative approach is the right way to implement AI in behavioral health: augment the professional, never override their insight.

6. Standards Set by Organizations, Not Vendors

Every behavioral health agency operates within a unique constellation of payer contracts, state regulations, licensing requirements, accrediting bodies, and clinical philosophies. At Clinically AI, we respect and support that complexity.

Instead of imposing a rigid set of documentation rules, we enable organizations to define their gold standards:

Required fields, structures, length, and template sections to generate

Terminology, regulatory requirements, and tone

Fidelity expectations and documentation scoring logic

Rules by service line, modality, or funding stream

These configurations are implemented across our platform to ensure that every note aligns with your expectations—not ours. Our AI becomes an extension of your policies, not a substitute for them. This enables your organization to scale your requirements in a granular way.
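As a rough illustration of organization-defined standards, the sketch below checks a generated note against rules keyed by service line. Everything here is hypothetical: `NoteStandard`, `check_note`, the `STANDARDS` table, and the section names are invented for the example and do not describe the platform's actual configuration format.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class NoteStandard:
    """Hypothetical organization-defined rules for one service line."""
    required_sections: Tuple[str, ...]
    min_words_per_section: int = 1


def check_note(note: Dict[str, str], standard: NoteStandard) -> List[str]:
    """Return a list of problems; an empty list means the note passes."""
    problems = []
    for section in standard.required_sections:
        text = note.get(section, "").strip()
        if not text:
            problems.append(f"missing section: {section}")
        elif len(text.split()) < standard.min_words_per_section:
            problems.append(f"section too short: {section}")
    return problems


# Rules keyed by service line; values are example data only.
STANDARDS = {
    "outpatient": NoteStandard(("Presenting Problem", "Intervention", "Plan"), 3),
    "group": NoteStandard(("Group Topic", "Participation"), 2),
}
```

Because the rules live in data rather than in code, each organization could change required sections or length thresholds per service line without touching the checking logic.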

7. Multi-Model Infrastructure Built for Task-Specific Accuracy

One model does not fit all in healthcare. That’s why Clinically AI employs a multi-model architecture designed to leverage the strengths of different AI models for specific clinical tasks.

For example:

Google MedLM is highly effective for tasks requiring medical terminology, diagnostic vocabulary, and prescription-based support.

Clinically AI proprietary models, fine-tuned on behavioral health narratives and therapeutic interventions, excel at tasks like progress note generation, group documentation, and treatment plan alignment.

Fine-Tuned Reasoning Models - Trillions of dollars have been invested by the latest AI companies such as OpenAI, Anthropic, Mistral, Groq, Grok, and others. We leverage some of their models in a HIPAA-compliant environment with Zero Data Retention options. This enables us to bring a broad range of models to our customers for an array of tasks.

GraphRAG - Traditional RAG (Retrieval-Augmented Generation) with vector databases can be slow and miss context. We have deployed graph-based retrieval systems so that our multi-modal agentic infrastructure provides accurate and consistent outputs based on customer prompts and data.

Each model in our environment is HIPAA-compliant and operates within strict usage boundaries defined by our ZDR and compliance infrastructure. We route requests to the model best suited to the task—ensuring speed, accuracy, and safety.
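Task-based routing of this kind can be pictured as a simple lookup table. The sketch below is an assumption-laden illustration: the task names and model labels are placeholders rather than real model identifiers, and a production router would likely weigh more than the task type.

```python
from typing import Dict

# Illustrative route table; labels are placeholders, not real model IDs.
ROUTES: Dict[str, str] = {
    "medical_terminology": "medical-llm",
    "progress_note": "behavioral-health-llm",
    "group_documentation": "behavioral-health-llm",
    "complex_reasoning": "reasoning-llm",
}
DEFAULT_MODEL = "behavioral-health-llm"


def route(task_type: str) -> str:
    """Return the model label for a task, falling back to a default."""
    return ROUTES.get(task_type, DEFAULT_MODEL)
```

Keeping the mapping in one table makes it easy to audit which class of model handles which clinical task, and to swap a model without changing calling code.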

8. U.S.-Based Development and Data Sovereignty Commitment

At Clinically AI, all development and operations are based in the United States. This includes our engineering, product development, clinical validation, and support teams. Unlike offshore competitors, we maintain full jurisdictional control and regulatory alignment within U.S. borders.

All customer data remains securely stored in U.S.-based cloud environments, and our architecture fully adheres to data sovereignty laws—ensuring that no data is transferred across borders or subjected to foreign access. This domestic infrastructure guarantees:

Full compliance with HIPAA, FERPA, and U.S. data protection statutes

Elimination of international access risk

Transparent accountability within the U.S. legal framework

For organizations that serve vulnerable populations and operate under strict state and federal oversight, this matters. You deserve an AI partner who meets the highest data security expectations and keeps your data close to home.

This precision-driven architecture allows organizations to use Clinically AI confidently across a broad range of functions without compromising compliance or performance.